FAQs

Here are some frequently asked questions about ServerAssistantAI:

Verification

I purchased ServerAssistantAI, how can I get verified on Discord?

After buying ServerAssistantAI from SpigotMC, BuiltByBit, or Polymart, follow these steps to get verified on our Discord server:

Join our Discord server.

Head to the #verify-purchase channel.

Click on the button corresponding to the platform where you purchased the plugin (SpigotMC, BuiltByBit, or Polymart).

Fill in the required information.

Our team will review the information provided and confirm the purchase within 24 hours.

Please note that the current manual verification process is temporary, and we are working on implementing an automated system.

General Information

How does ServerAssistantAI compare to other AI-related plugins?

The main difference between ServerAssistantAI and other AI-related plugins is that ServerAssistantAI provides context-aware responses. With other plugins, you have to add each piece of information in a specific format, and all of that information is sent to the AI API each time a question is asked, which increases cost and response times. ServerAssistantAI sends only the context relevant to each question, supports many AI services (including free models), and can draw on files containing hundreds of pages of information.

What are the costs associated with using ServerAssistantAI?

ServerAssistantAI offers both completely free and paid AI models. Free models, like the default Command R+ model, use Cohere's API and can be used without any cost. Paid models, like OpenAI's GPT-4o or Claude Haiku, have usage costs. We offer many different AI providers with both free and paid options.

What are Embedding and Large Language Models, and how do they work in ServerAssistantAI?

An embedding model is a type of AI model that converts text data, such as the content in the documents/ directory, into numerical representations called embeddings. These embeddings capture the semantic meaning and relationships between different pieces of text. When a user asks a question, the embedding model is used to find the most relevant context from the files in the documents/ directory by comparing the embeddings of the question with the embeddings of the text in the files.

Once the relevant context is found, it is combined with the user's question and sent to the Large Language Model (LLM). LLMs are powerful AI models that can understand and generate human-like text based on the input they receive. The LLM processes the question and the context provided by the embedding model to generate an accurate and context-aware response.

ServerAssistantAI uses AI service providers through an API for both embedding models and LLMs. By default, the plugin comes with Cohere (for free models) and OpenAI (for premium paid models). We also offer many other providers, either built in or available as free downloadable addons, such as Anthropic's Claude, Google Gemini, and Groq. The Recommended Models wiki page shows the current recommended models for both free and paid options.

In summary, the embedding model helps find the most relevant information from the files in the documents/ directory, while the LLM uses that information along with the user's question to generate a helpful response.
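The two-stage flow above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the plugin's implementation: the three-dimensional vectors are hand-made stand-ins for real embeddings, and the chunk texts, `most_relevant_chunk`, and `build_llm_input` are hypothetical names.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical document chunks with hand-made 3-dimensional embeddings.
# A real embedding model returns vectors with hundreds of dimensions or more.
CHUNKS = {
    "Use the clan command to start a clan.": [0.9, 0.1, 0.0],
    "The server restarts every day at 04:00.": [0.0, 0.2, 0.9],
}

def most_relevant_chunk(question_embedding):
    """Return the chunk whose embedding is closest to the question's."""
    return max(CHUNKS, key=lambda text: cosine_similarity(CHUNKS[text], question_embedding))

def build_llm_input(question, question_embedding):
    """Combine the retrieved context with the question before calling the LLM."""
    context = most_relevant_chunk(question_embedding)
    return f"Context:\n{context}\n\nQuestion:\n{question}"

# Pretend the embedding model placed the question close to the clan chunk.
prompt = build_llm_input("How can I create a faction?", [0.8, 0.2, 0.1])
print(prompt)
```

The embedding model only selects the context; the LLM never sees the chunks that were not selected.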

Can ServerAssistantAI only answer Minecraft-server-related questions?

While the default configuration of ServerAssistantAI is designed to focus on Minecraft-server-related topics, the AI's knowledge and behavior can be easily customized by modifying the system prompt and the content of the files in the documents/ directory.

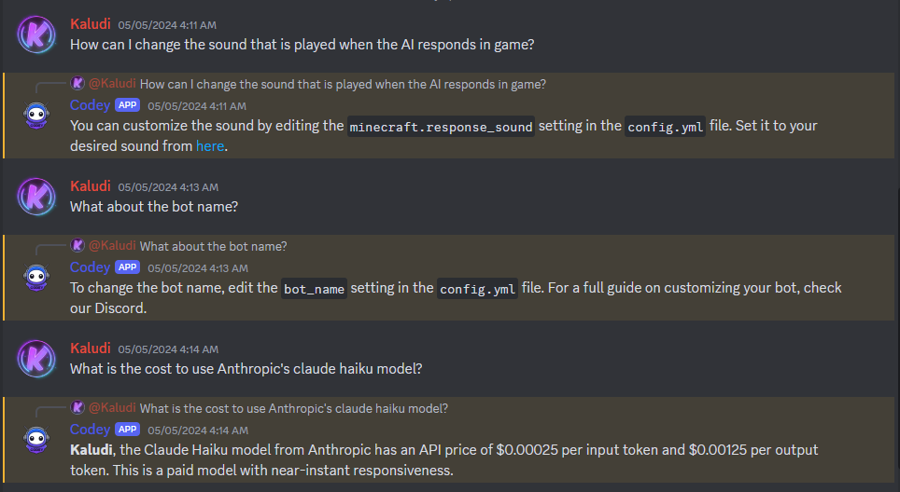

We have plugin developers who use ServerAssistantAI to provide their users with instant support and answers to questions related to their specific plugins. There are many different use cases available. Our Codey bot, which is hosted on our Discord server, is powered by ServerAssistantAI and is used to answer users' questions regarding our plugins.

What versions of Minecraft are compatible with ServerAssistantAI?

ServerAssistantAI supports Spigot, Paper, Purpur, Folia, and similar server types, and Minecraft versions 1.16 and above; 1.21.3 is fully supported. Velocity and BungeeCord support is planned, but there is no ETA.

Can I use ServerAssistantAI without a Discord server?

Yes, ServerAssistantAI can be used without a Discord server. By default, the discord.enabled setting in the config.yml file is set to false. If you want to use the plugin only within Minecraft, you can leave this setting as is, and the plugin will function only for in-game interactions.

What are the best models to use with ServerAssistantAI?

ServerAssistantAI supports a variety of both free and paid embedding and chat models. Here are some of the best options:

Free Models

Embedding: Google-AIStudio's text-embedding-004 model has a 66.31 MTEB leaderboard score.

Embedding: Cohere's embed-multilingual-v3.0 model has a 64.01 MTEB leaderboard score.

LLM: Google-AIStudio's gemini-1.5-pro has 1301 ELO and around 57.2 tokens per second. It requires the free Google AI Studio Addon.

LLM: Groq's llama-3.2-90b-text-preview has 1249 ELO and 300+ tokens per second.

LLM: Google-AIStudio's gemini-1.5-flash has 1227 ELO and 133.3 tokens per second. It requires the free Google AI Studio Addon.

Paid Models

Embedding: OpenAI's text-embedding-3-large has a 64.59 MTEB leaderboard score with $0.00013 cost per 1k tokens.

LLM: OpenAI's gpt-4o has 1361 ELO and around 49.97 tokens per second with $0.005 input and $0.015 output cost per 1k tokens.

LLM: OpenAI's gpt-4o-mini model has 1273 ELO with 166 tokens per second with $0.00015 input and $0.0006 output cost per 1k tokens.

LLM: Anthropic's claude-3-5-sonnet-20241022 model has 1282 ELO with 113.28 tokens per second with $0.003 input and $0.015 output cost per 1k tokens. It requires the free Anthropic Addon.

For more information on recommended models, check out the Recommended Models wiki page.
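To turn the per-1k-token prices above into a per-question estimate, the arithmetic can be sketched as follows. The prices are the gpt-4o-mini figures listed above; the token counts and the `request_cost` helper are made up for illustration, and real usage depends on how much context is sent with each question.

```python
def request_cost(input_tokens, output_tokens, input_price_per_1k, output_price_per_1k):
    """Cost in USD of one request, given prices per 1k tokens."""
    input_cost = (input_tokens / 1000) * input_price_per_1k
    output_cost = (output_tokens / 1000) * output_price_per_1k
    return input_cost + output_cost

# gpt-4o-mini prices from the list above; 2000 input and 500 output tokens are assumed.
cost = request_cost(2000, 500, input_price_per_1k=0.00015, output_price_per_1k=0.0006)
print(f"${cost:.4f}")  # prints $0.0006
```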

What format should I use for the documents in the documents/ directory?

We recommend using the Markdown (.md) format for your documents in the documents/ directory. Markdown helps the AI better understand the structure and context of the information, leading to more accurate and relevant responses. Additionally, using Markdown allows for clickable links in the AI's responses, which can enhance the user experience in both Minecraft and Discord.

How can I use a custom base URL with an OpenAI-Variant provider?

To use a custom base URL with an OpenAI-variant provider, follow these steps:

Set the provider field to openai-variant in the config.yml file for the LLM, the Embedding section, or both.

Add the appropriate model name for the chosen provider.

Add the base_url field under the provider field and set it to your custom URL. Do not include /chat/completions (for an LLM) or /embeddings (for an embedding model) in the URL.

Add the API key to the OpenAI-Variant section in the credentials.yml file. If the provider does not require an API key, enter any placeholder value in the API key field.

Reload the plugin to apply the changes.
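The reason the base URL must not contain the endpoint path is, presumably, that the client appends it itself. The sketch below illustrates that assumption; `endpoint_url` and `check_base_url` are hypothetical helpers, and https://llm.example.com/v1 is a placeholder rather than a real provider.

```python
ENDPOINT_PATHS = {"llm": "/chat/completions", "embedding": "/embeddings"}

def endpoint_url(base_url, kind):
    """Join a base URL with the path an OpenAI-compatible API expects."""
    return base_url.rstrip("/") + ENDPOINT_PATHS[kind]

def check_base_url(base_url):
    """Return a warning if base_url already ends with an endpoint path, else None."""
    trimmed = base_url.rstrip("/")
    for path in ENDPOINT_PATHS.values():
        if trimmed.endswith(path):
            return f"Remove '{path}' from base_url"
    return None

print(endpoint_url("https://llm.example.com/v1", "llm"))
print(check_base_url("https://llm.example.com/v1/chat/completions"))
```

If the suffix were left in the base URL, a client that behaves like this sketch would request a doubled path such as /chat/completions/chat/completions.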

Usage and Features

How does the AI learn and generate responses?

All the text related to your server can be put into files within the documents/ directory. There is no specific format you have to follow; however, we have found that the Markdown (.md) format works well for helping the AI better understand the structure and context of the information, leading to more accurate and relevant responses. The AI doesn't "learn" from these files. Instead, it uses the embeddings generated by the embedding model to find the chunks most closely related to the question being asked, and sends that context along with the prompt request. The number of chunks and the relevance score required for a chunk to be considered related can be adjusted in the config.
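How the two adjustable values interact can be sketched as below. The names `max_chunks` and `min_score` are hypothetical stand-ins for the config options, and the scores are invented; in practice they come from comparing embeddings.

```python
def select_chunks(scored_chunks, max_chunks, min_score):
    """Keep the highest-scoring chunks that meet the minimum relevance score."""
    relevant = [(score, text) for score, text in scored_chunks if score >= min_score]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in relevant[:max_chunks]]

# Invented relevance scores for four document chunks.
scored = [
    (0.82, "Clans are created with the clan command."),
    (0.35, "The server restarts daily."),
    (0.67, "Clan leaders can invite members."),
    (0.71, "Clans can claim land."),
]
selected = select_chunks(scored, max_chunks=2, min_score=0.5)
print(selected)
```

Raising the minimum score trims loosely related context, while raising the chunk count sends more text (and more tokens) with each question.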

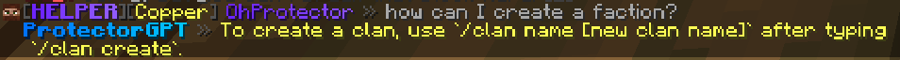

This is not a keyword-based search. Thanks to AI embedding models, context from the files in the documents/ directory is pulled accurately, even if the question uses completely different words. For example, let’s say a document states:

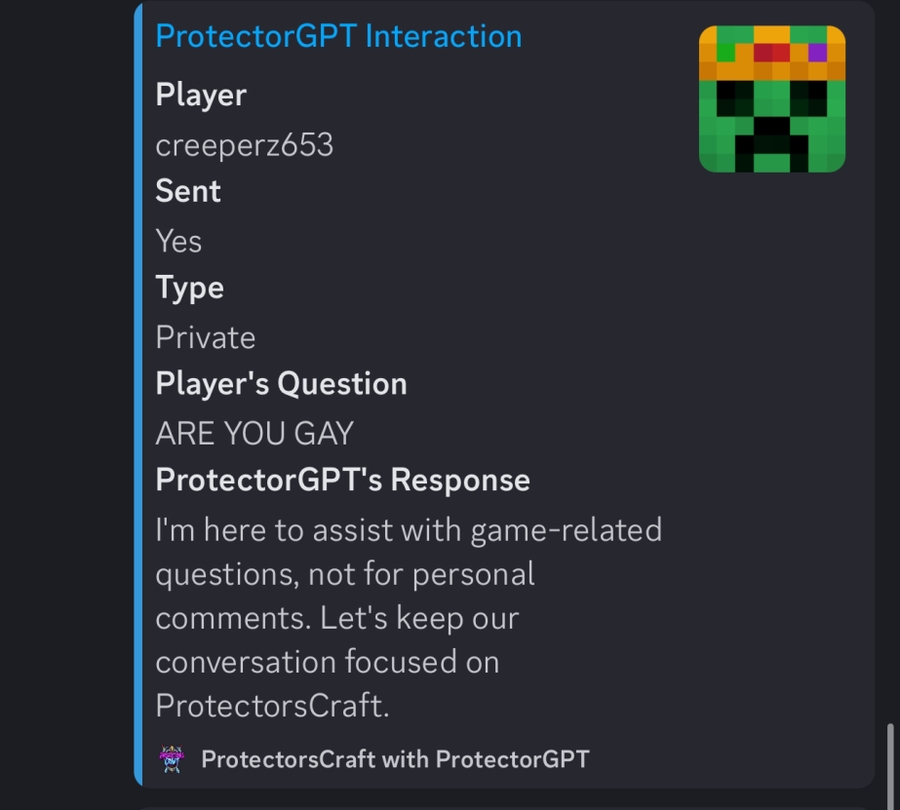

If someone asks “How can I create a faction?”, it will know the user is talking about the clan command and send the information for creating a clan (image attached below).

What types of files can I use for the AI's knowledge base?

ServerAssistantAI supports multiple file types for the AI's knowledge base. You can use various formats, including .txt, .md, .pdf, .docx, .pptx, and .xlsx files within the documents/ directory. This allows you to organize your server information more effectively and include a wider range of content for the AI to draw from when answering questions.

How can I customize the AI's responses?

Modify the files in the documents/ directory to include server-specific information, rules, and guidelines that the AI can use to generate accurate and relevant responses.

Adjust the AI's persona and behavior by editing the prompt-header.txt files in the discord/ and minecraft/ directories.

Customize the templates in the information-message.txt and question-message.txt files to match your server's style and tone.

Can I set a limit to the number of questions players can ask the AI?

Yes, you can set a daily question limit for both Minecraft and Discord in the config.yml file. Look for the discord.limits and minecraft.limits settings and adjust them according to your preferences. ServerAssistantAI allows you to define multiple user groups with different daily limits:

For Minecraft, in-game users can be assigned to a specific group using the permission serverassistantai.group.<group>.

For Discord, users can be assigned to a group using either the role ID or role name in the config.
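The idea of per-group daily limits can be sketched as follows. This is a simplified model, not the plugin's code: the `DailyLimiter` class, the group names, and the limit values are all hypothetical.

```python
from datetime import date

class DailyLimiter:
    """Counts questions per user per day and enforces a per-group limit."""

    def __init__(self, group_limits, default_group="default"):
        self.group_limits = group_limits
        self.default_group = default_group
        self.counts = {}  # (user, day) -> questions asked on that day

    def try_ask(self, user, group=None, today=None):
        """Record a question and return True, or return False if the limit is reached."""
        today = today or date.today()
        # Unknown or missing groups fall back to the default group's limit.
        limit = self.group_limits.get(group, self.group_limits[self.default_group])
        used = self.counts.get((user, today), 0)
        if used >= limit:
            return False
        self.counts[(user, today)] = used + 1
        return True

limiter = DailyLimiter({"default": 2, "vip": 5})
day = date(2024, 1, 1)
results = [limiter.try_ask("Steve", today=day) for _ in range(3)]
print(results)  # the third question exceeds the default limit of 2
```

Because the counter is keyed by day, the same player can ask again once the date changes.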

Can the AI's responses be sent privately to the player instead of globally in-game?

Yes, ServerAssistantAI comes with both public and private response options. Players can either ask a question publicly in chat or privately using /serverassistantai ask (question). There is also a config option called send_replies_only_to_sender that sends the reply to a publicly asked question only to the player who asked it, instead of to everyone in the public in-game chat.

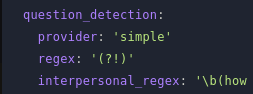

Can I disable question detection and only allow the AI to respond to the /serverassistantai ask or /serverassistantai chat commands?

If you want ServerAssistantAI to only respond to the /serverassistantai ask or /serverassistantai chat commands and not to questions in the chat, you can disable question detection in the config.yml file. To do this, set question_detection.provider to 'none' for Minecraft. Alternatively, if you want to keep the question detection system but prevent it from matching any messages, you can set the regex under the question_detection section to a pattern that never matches anything, like this:

regex: 'a^' or regex: '(?!)'
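Both patterns are contradictions: a^ asks for the start of the text after an a has already been consumed, and (?!) is an empty negative lookahead that always fails. The short check below uses Python's re module purely as an illustration; the plugin runs on Java, whose regex engine treats these two patterns the same way in its default mode, but that is stated here as an assumption rather than tested.

```python
import re

NEVER_MATCH = ["a^", "(?!)"]
samples = ["", "a", "a^", "how do I join?", "a\nb"]

results = {}
for pattern in NEVER_MATCH:
    compiled = re.compile(pattern)
    # Collect every sample in which the pattern is found anywhere.
    results[pattern] = [s for s in samples if compiled.search(s)]
    print(pattern, "matched:", results[pattern])
```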

How can I prevent the AI from responding to certain messages on Discord?

ServerAssistantAI allows you to define a skip_keyword in the config.yml file. If a message contains this keyword, the AI will not respond to it. You can also specify skip_keyword_roles to restrict the use of the skip keyword to specific Discord roles.

For example, you can set the skip_keyword to a simple character like a period (.) and list the roles that are allowed to use it in skip_keyword_roles.

With this configuration, if a user with one of the specified roles sends a message containing a period (.), ServerAssistantAI will skip responding to that message. This can be useful when a user with the designated role wants to send a message in a channel monitored by the AI without triggering a response.
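The decision described above can be sketched as a small function. It is a guess at the logic, not the plugin's code: in particular, treating an empty role list as "anyone may use the keyword" is an assumption, and the role names are invented.

```python
def should_skip(message, author_roles, skip_keyword, skip_keyword_roles):
    """Return True if the AI should ignore this Discord message."""
    if not skip_keyword or skip_keyword not in message:
        return False
    # Assumption: an empty role list means anyone may use the skip keyword.
    if not skip_keyword_roles:
        return True
    return any(role in skip_keyword_roles for role in author_roles)

allowed_roles = ["Staff", "Admin"]  # hypothetical role names
print(should_skip("brb, fixing the lobby.", ["Staff"], ".", allowed_roles))
print(should_skip("How do I claim land.", ["Member"], ".", allowed_roles))
```

The first call returns True because a Staff member used the keyword; the second returns False because the author has none of the listed roles.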

Will ServerAssistantAI be able to handle dozens of players asking questions concurrently?

Yes, ServerAssistantAI is fully asynchronous, and can process multiple requests concurrently from a large number of players without impacting the server's performance. The plugin utilizes asynchronous programming techniques to handle AI interactions in the background, allowing the server to continue running smoothly even when handling a high volume of requests.

How can I customize the messages displayed by ServerAssistantAI in Minecraft and Discord?

ServerAssistantAI comes with a messages.yml file that allows you to customize various messages displayed in both Minecraft and Discord. This file uses the MiniMessage format for Minecraft messages and Discord formatting for Discord messages. You can modify command responses, error messages, and other texts to match your server's style and language. Be sure to reload the plugin after making changes to the messages.yml file for the changes to take effect.

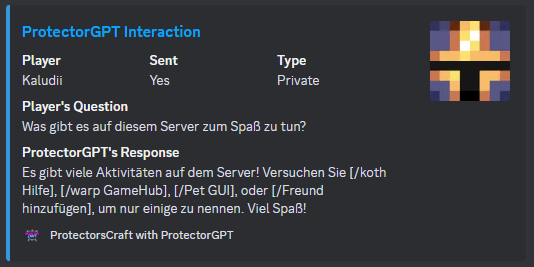

Can I use ServerAssistantAI with multiple languages?

Yes, if the model you choose is multilingual, players can converse with it in the languages it is trained on. You can explore different language models that support multiple languages and adapt the configuration files accordingly.

Can I use ServerAssistantAI on a proxy server (e.g., Velocity, BungeeCord)?

ServerAssistantAI currently is not compatible with proxy servers like Velocity or BungeeCord, but it is planned. There is currently no ETA on this.

Can I run multiple instances of ServerAssistantAI on the same server?

Running multiple instances of ServerAssistantAI on the same server is not supported and may lead to conflicts or unexpected behavior. If you need to run ServerAssistantAI on multiple servers, please install and configure it separately for each server instance.

Can I customize the format of the AI's responses on Discord?

Yes, ServerAssistantAI allows you to customize the format of the AI's responses on Discord using the discord.reply_format setting in the config.yml file. You can choose between plain text responses or rich embeds.

For plain text responses, set the format to a text template that contains the [message] placeholder, which is replaced with the actual AI-generated response.

For rich embed responses, define an embed object instead. It lets you set various properties of the Discord embed, such as the color, description, footer text, and footer icon. The [message] placeholder within the description field is replaced with the AI's response.
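The [message] substitution can be sketched for both reply styles as follows. The templates, field values, and function names below are hypothetical; the real values live in config.yml and their exact layout may differ.

```python
def render_plain(template, ai_response):
    """Fill the [message] placeholder in a plain text reply template."""
    return template.replace("[message]", ai_response)

def render_embed(embed, ai_response):
    """Return a copy of the embed with [message] filled in its description."""
    rendered = dict(embed)
    rendered["description"] = embed["description"].replace("[message]", ai_response)
    return rendered

# Hypothetical templates for illustration only.
plain_template = "**Answer:** [message]"
embed_template = {
    "color": "#5865F2",
    "description": "[message]",
    "footer": {"text": "AI-generated reply"},
}

answer = "Use the clan command to create a clan."
print(render_plain(plain_template, answer))
print(render_embed(embed_template, answer)["description"])
```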

Note: Conversation history doesn't work with embed format. Use plain text if you need the AI to consider previous messages for context.

You can use our embed creator to visually design your embed and generate the corresponding configuration.

Can I use custom placeholders in the AI's responses?

Yes, ServerAssistantAI supports custom placeholders through integration with PlaceholderAPI. You can include placeholders in any of the .txt files to dynamically insert player-specific information such as username, rank, playtime, and more into the AI's responses. This allows for personalized interactions and leverages data from various other plugins.

Is there a way to have continuous conversations with the AI?

ServerAssistantAI includes the /serverassistantai chat command for Minecraft, which allows players to engage in a continuous private conversation with the AI. To start a chat session, simply use the /serverassistantai chat command in-game. The AI will then respond to your messages in a private conversation, maintaining the context across multiple interactions.

To leave the continuous chat mode, you can use the /serverassistantai chat stop command. This will end your current chat session with the AI, and any subsequent messages will be treated as regular chat messages or commands.

Can I see more in-depth information about the prompt, information, and history sent to the AI?

Yes, ServerAssistantAI provides an option to display a link to a paste containing helpful information within the AI's responses. By enabling the helpful_information.minecraft.enabled and helpful_information.discord.enabled settings in the config.yml file, the AI's responses will include a link to a paste that contains additional context about the response process, including the tokens used, response time, information, and history sent to the AI model.

You can also set helpful_information.minecraft.detailed and helpful_information.discord.detailed to true to include even more detailed information in the paste, such as the full prompt sent to the AI. This can be useful for understanding how the AI generates its responses and for debugging purposes.

Can I customize the interaction logs that are sent to Discord for both Minecraft and Discord?

Yes, you can customize the interaction logs sent to Discord for both Minecraft and Discord interactions using the JSON files in the interaction-messages/ directory:

discord-interaction-message.json: Defines the format for Discord user interactions.

minecraft-interaction-message.json: Defines the format for Minecraft player interactions.

You can modify these JSON files to change the appearance and content of the interaction logs sent to designated Discord channels or webhooks.

Can I set minimum and maximum limits for questions?

Yes, you can set both minimum word and maximum character limits for questions in the config.yml file.

For the minimum word limit, look for the minecraft.minimum_words and discord.minimum_words fields. Adjust these values according to your preferences. By default, the minimum word limit is set to 3 for both Minecraft and Discord. Questions shorter than this limit will not be processed by ServerAssistantAI.

For the maximum character limit, look for the minecraft.maximum_characters and discord.maximum_characters fields. Adjust these values to your desired limits. By default, the maximum character limit is set to 500 for both Minecraft and Discord. Questions longer than this limit will not be processed by ServerAssistantAI.
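The two checks can be sketched as a single function using the default values stated above. How the plugin actually counts words is not documented here, so splitting on whitespace is an assumption, as is the `check_question` name.

```python
def check_question(question, minimum_words=3, maximum_characters=500):
    """Return None if the question is acceptable, else the reason it is rejected."""
    # Assumption: words are counted by splitting on whitespace.
    if len(question.split()) < minimum_words:
        return "too few words"
    if len(question) > maximum_characters:
        return "too many characters"
    return None

print(check_question("How?"))
print(check_question("How do I claim land?"))
print(check_question("word " * 200))
```

The three calls print "too few words", None, and "too many characters" respectively, since the last input is 1000 characters long.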

Can I add a new alias for the ServerAssistantAI commands in-game, such as /ask instead of /serverassistantai ask?

Yes, you can add new aliases for ServerAssistantAI commands in-game by modifying your server's commands.yml file. For example, to create an /ask alias for the /serverassistantai ask command, add an ask entry under the aliases section that maps to serverassistantai ask $1-.

In this example, the /ask command will be an alias for /serverassistantai ask, passing all arguments after the command ($1-) to the original command. After modifying the commands.yml file, restart your server for the changes to take effect.
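What $1- does can be illustrated with a simplified model of the alias expansion. This sketch handles only the $1- form and is not how the server implements aliases; `expand_alias` is a hypothetical helper.

```python
def expand_alias(template, args):
    """Expand "$1-" in an alias template to all arguments, separated by spaces."""
    return template.replace("$1-", " ".join(args)).strip()

typed = "/ask how do I claim land?"
# Split the typed command into the alias name and its arguments.
name, *args = typed.lstrip("/").split()
expanded = expand_alias("serverassistantai ask $1-", args)
print(expanded)  # prints: serverassistantai ask how do I claim land?
```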

Will ServerAssistantAI work with responding to gradient player chat messages?

Yes, ServerAssistantAI will work with gradient chat messages. The plugin uses the modern Paper chat event if it detects that it is available. This event provides a component instead of just a text string, allowing ServerAssistantAI to process and respond to gradient chat messages without any issues.

How can I configure the replying status animation in-game?

You can configure the replying status animation in-game using the following settings in the config.yml file:

minecraft.replying_status.type: The replying status animation. Supports TITLE, SUBTITLE, and BOSSBAR.

minecraft.replying_status.bossbar_color: The color of the bossbar. Only effective when type is set to BOSSBAR.

minecraft.replying_status.bossbar_duration: The time it takes for the bossbar to show full progress. Doesn't affect actual response time. Only effective when type is set to BOSSBAR.

Technical and Setup

How do I enable the Discord interaction webhook?

To enable the Discord interaction webhook feature in ServerAssistantAI, follow these steps:

Open the config.yml file located in the plugins/ServerAssistantAI directory.

If you're using a bot, locate the minecraft.channel setting under the Minecraft Configuration section and replace the default value with the ID of a Discord channel.

If you prefer using a webhook, find the minecraft.webhook_url setting and enter your Discord webhook URL.

Save the config.yml file.

Reload ServerAssistantAI using /serverassistantai reload.

How can I customize the AI's name and avatar in Discord?

To customize the AI's name and avatar in Discord, you'll need to create a new Discord bot account and provide its token in the discord.bot_token setting in the config.yml file. You can then modify the bot's name and avatar through the Discord Developer Portal. Additionally, you can set a custom status for your bot using the discord.bot_status option in the config.yml file.

What should I do if the bot's responses don't make sense or include weird symbols and words?

If you notice that the bot's responses are not coherent or contain unusual symbols and words, it may be due to an incompatible prompt format for the selected AI model. Each model has its own specific prompt format requirements, and using the wrong format can lead to unexpected behavior.

To resolve this issue, follow these steps:

Check our Discord server for the prompt template specific to your selected model, if available. We provide prompt format templates for many models in the #saai-resources and #saai-addons channels, which are accessible to verified users.

If a template is available for your model, download it and replace the contents of the prompt-header.txt, question-message.txt, and information-message.txt files in the discord/ and minecraft/ directories with the corresponding sections from the template.

If no template is available, consult the documentation provided by the model's creators for guidance on prompt engineering and configuration. Adjust the prompt files accordingly to match the recommended format for your model.

Please note that different models may require different adjustments to the system prompt to achieve the best results. Experimentation and fine-tuning may be necessary.

By default, ServerAssistantAI comes with a prompt format optimized for Cohere's Command R Plus model. If you decide to use another model, you will need to update the prompt format. For example, the default prompt format includes specific tokens such as <|START_OF_TURN_TOKEN|><|SYSTEM_TOKEN|> at the beginning of the prompt-header.txt file and <|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|USER_TOKEN|> in the question-message.txt and information-message.txt files. These tokens may need to be replaced or removed depending on the requirements of your chosen model.
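If a model expects no special tokens at all, removing the Command R style markers is a mechanical step that can be sketched as below. The header sentence is invented for the example, and `strip_control_tokens` is a hypothetical helper; models that need their own markers still require those to be added by hand.

```python
import re

# Matches control tokens of the form <|SOME_NAME|>, like those named above.
CONTROL_TOKEN = re.compile(r"<\|[A-Z_]+\|>")

def strip_control_tokens(text):
    """Remove Command R style control tokens from a prompt file's text."""
    return CONTROL_TOKEN.sub("", text)

# Invented example content for a prompt-header.txt file.
header = "<|START_OF_TURN_TOKEN|><|SYSTEM_TOKEN|>You are a helpful Minecraft server assistant."
cleaned = strip_control_tokens(header)
print(cleaned)  # prints: You are a helpful Minecraft server assistant.
```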

Do I have to reset my configuration after a new update is released?

No, you don't need to reset your configuration when a new update for ServerAssistantAI is released. The plugin is designed to automatically update its configuration file (config.yml) with any new options or settings introduced in the latest version. Your existing configuration will be preserved, and you can simply adjust the new settings as needed.

Why aren't the Discord interaction logs being sent?

To ensure that ServerAssistantAI can send interaction messages to the designated Discord channel, make sure that your bot has the "View Channel," "Send Messages," and "Embed Links" permissions in that channel.

Can I use ServerAssistantAI on a modded Minecraft server?

ServerAssistantAI can be used with Minecraft servers running Spigot, Paper, Folia, or similar server software. While it may work with other server software, compatibility cannot be guaranteed. We plan on adding compatibility for proxy software like Velocity and BungeeCord in the future.

How can I optimize ServerAssistantAI's performance on my server?

To optimize ServerAssistantAI's performance, consider the following tips:

Ensure your server meets the recommended hardware requirements for running ServerAssistantAI.

Keep your ServerAssistantAI version up to date to benefit from performance improvements and bug fixes.

Adjust the configuration settings in config.yml to fine-tune performance.

Monitor your server's resource usage.

Can I use ServerAssistantAI with different language models or AI providers?

ServerAssistantAI comes with Cohere and OpenAI language models by default; however, we offer addons that include many other AI providers. If you have a specific language model or AI provider in mind that is not listed, please reach out to us on our Discord server.

How can I create custom addons for ServerAssistantAI?

To create custom addons for ServerAssistantAI, you can use the plugin's API. You can find more information about creating add-ons in the Addons section of the wiki and the API reference documentation. Examples of addons that were created for ServerAssistantAI can be found on the SAAI-Addons GitHub repository.

Security, Privacy, and Hallucinations

Does ServerAssistantAI have a built-in censor or content filter?

Yes, the default configuration of ServerAssistantAI comes with a well-designed system prompt that keeps the AI's responses focused on Minecraft-related topics. This helps to prevent the AI from generating inappropriate or off-topic content. However, server owners can easily adjust the system prompt as needed to further customize the AI's behavior and ensure it aligns with their server's rules and guidelines.

Are the questions or context messages sent to CodeSolutions?

No, none of the questions or context messages are sent to CodeSolutions. They are directly sent to the AI provider chosen by the user.

Are there any AI hallucination issues?

There is a higher chance of hallucinations with open-source free models compared to paid models. However, it takes effort to make them hallucinate. If you choose a paid model like ChatGPT or Claude Haiku, the risk of hallucinations is much lower.

How do I report a security vulnerability in ServerAssistantAI?

If you discover a security vulnerability in ServerAssistantAI, please report it responsibly by emailing us at [email protected] or creating a ticket on Discord. We take security issues seriously and appreciate your help in keeping ServerAssistantAI safe for everyone. Please do not disclose the vulnerability publicly until it has been addressed by our team.

How does ServerAssistantAI handle player privacy?

We take player privacy seriously. ServerAssistantAI does not collect or store any personal player data other than anonymous usage data for bStats. The plugin only processes player messages and generates responses based on the provided configuration and server information.

For bStats, ServerAssistantAI collects anonymous usage data, including the AI model provider (e.g., OpenAI, Cohere) and whether Discord features are enabled. This data is aggregated and does not contain any personally identifiable information. You can view the collected data on the ServerAssistantAI bStats page.

If you would like to read our Terms of Service, they are listed on our website. You are also free to contact us for more information.

These FAQs cover a range of common questions and concerns that users may have about ServerAssistantAI. If you can't find an answer to your question here, feel free to reach out on our support channels for further assistance.